Decision Trees

Decision Trees are a popular supervised machine learning algorithm used for both classification and regression tasks. They provide a tree-like model of decisions and their possible consequences, including outcomes, costs, and utility.

1. Introduction to Decision Trees

- Definition: A Decision Tree is a flowchart-like structure where an internal node represents a feature (attribute), the branch represents a decision rule, and each leaf node represents an outcome (class label or value).

- Use Cases: Used in various domains like finance, healthcare, marketing, and more for tasks such as classification, regression, and decision analysis.

Key Characteristics:

- Easy to interpret and visualize.

- Handles both numerical and categorical data.

- Requires little data preprocessing (no need for normalization or scaling).

- Prone to overfitting, especially with deep trees.

2. Structure of a Decision Tree

Components:

- Root Node: Represents the entire dataset and the first feature split.

- Internal Nodes: Represent splits based on a specific feature and a threshold or condition.

- Branches: Represent the outcome of a split decision.

- Leaf Nodes: Represent the final output (class label or regression value).

3. Algorithmic Steps

-

Select the Best Split: Choose the feature and threshold that best separates the data. Common criteria include:

- Gini Index (used in classification tasks)

- Information Gain / Entropy (used in classification tasks)

- Mean Squared Error (used in regression tasks)

-

Split the Dataset: Divide the dataset based on the selected feature and threshold.

-

Repeat: Recursively apply the splitting process to the subsets until a stopping condition is met (e.g., maximum depth or minimum samples per node).

-

Assign Leaf Values: Assign the class label (classification) or the mean value (regression) to the leaf nodes.

4. Splitting Criteria

(a) Gini Index

- Measures the impurity of a node.Lower values indicate better splits.

- Formula:

where (p_i) is the proportion of instances of class (i).

- A lower Gini Index indicates a better split.

(b) Entropy and Information Gain

- Entropy:

- Information Gain:

(c) Mean Squared Error (MSE) for Regression

- Measures the variance within a node.

- Formula:

where is the true value and is the mean value.

5. Advantages and Disadvantages

Advantages:

- Simple to understand and interpret.

- Handles both numerical and categorical data.

- Requires minimal data preprocessing.

- Can handle non-linear relationships.

Disadvantages:

- Prone to overfitting (solved using pruning or ensemble methods).

- Sensitive to small changes in data (can lead to different trees).

- Can be biased towards features with more levels or unique values.

6. Practical Techniques

(a) Pruning

- Reduces the size of a decision tree to avoid overfitting.

- Types:

- Pre-pruning: Stop growing the tree early (e.g., max depth, min samples per split).

- Post-pruning: Remove branches from a fully grown tree based on performance.

(b) Handling Missing Values

- Impute missing values or use substitute splits.

(c) Feature Importance

- Measures the contribution of each feature to the decision-making process.

- Computed based on the reduction in impurity.

7. Variants of Decision Trees

- Classification Trees: Used for categorical outcomes.

- Regression Trees: Used for continuous outcomes.

- CART (Classification and Regression Tree): A framework for building both types of trees.

8. Common Use Cases

- Credit Risk Analysis

- Disease Diagnosis

- Customer Segmentation

- Predicting House Prices

- Game Theory and Strategy Building

9. Advanced Topics

(a) Ensemble Methods

- Combine multiple decision trees to improve accuracy and robustness.

- Types:

- Random Forest: Combines multiple trees trained on random subsets of features and samples.

- Gradient Boosting: Builds trees sequentially, with each tree correcting errors of the previous ones.

(b) Hyperparameter Tuning

- Key hyperparameters:

- Max Depth

- Min Samples Split

- Min Samples Leaf

- Max Features

- Use Grid Search or Random Search for optimization.

8. Implementation in Python

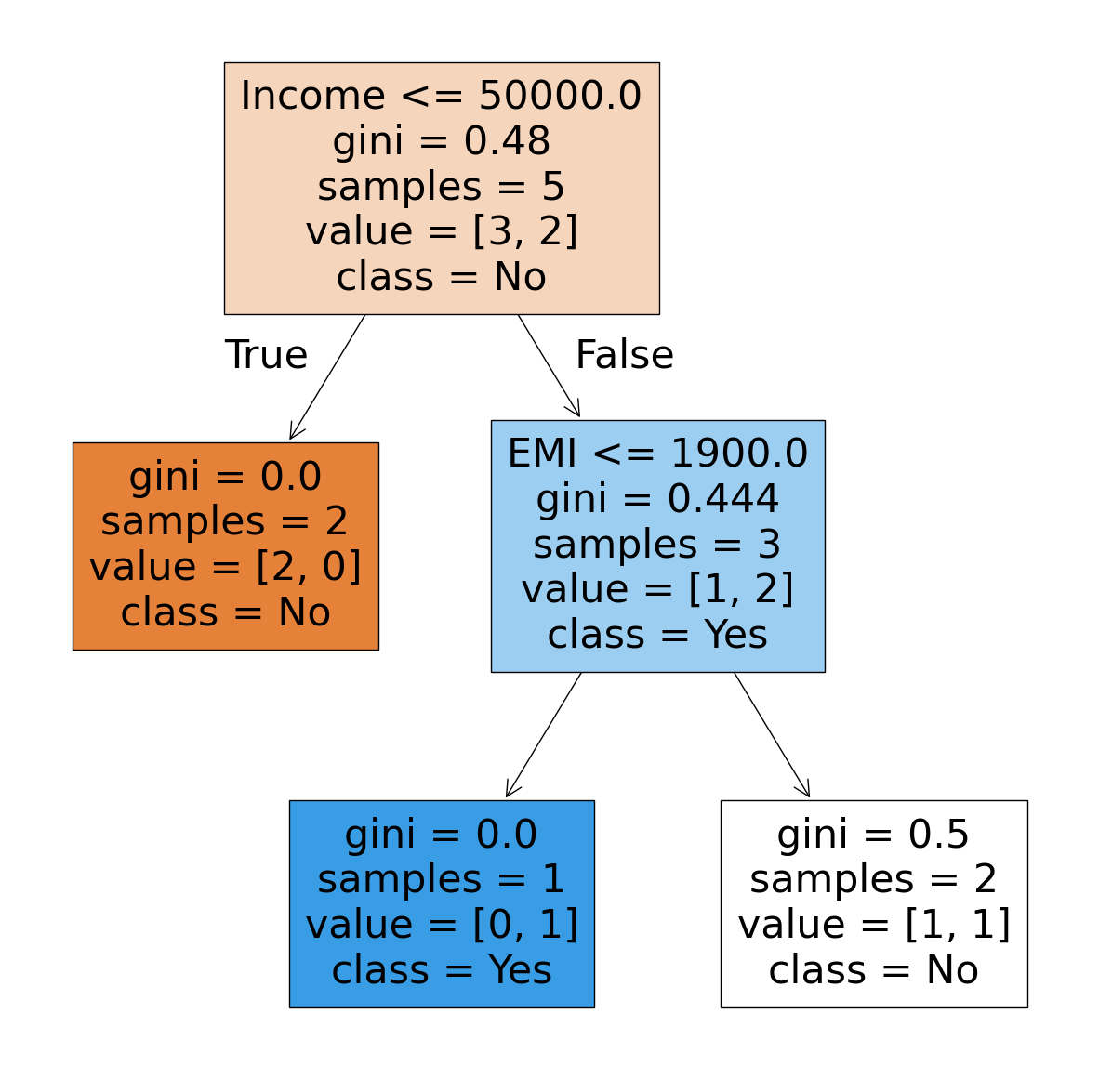

Here’s a simple and clear example of a Decision Tree Classifier using a small, straightforward dataset to make it easy to understand:

Example: Predicting if a Person is Likely to Buy a Product

We will use a small dataset where the features are Age and Income, and the target is whether the person will buy the product (Yes or No).

from sklearn.tree import DecisionTreeClassifier, plot_tree

import pandas as pd

import matplotlib.pyplot as plt

# Example Dataset

data = {

"Age": [25, 45, 35, 50, 23],

"Income": [40000, 80000, 60000, 120000, 35000],

"EMI": [1500, 2000, 1800, 2500, 1200],

"Will_Buy": ["No", "no", "Yes", "Yes", "No"]

}

df = pd.DataFrame(data)

# Ensure target classes are consistent

df["Will_Buy"] = df["Will_Buy"].str.lower()

# Features (X) and Target (y)

X = df[["Age", "Income","EMI"]]

y = df["Will_Buy"]

# Decision Tree with Gini Index

clf = DecisionTreeClassifier(criterion="gini", max_depth=2, random_state=42)

clf.fit(X, y)

# Visualize the Decision Tree

plt.figure(figsize=(15, 15))

plot_tree(clf, filled=True, feature_names=["Age", "Income","EMI"], class_names=["No", "Yes"])

plt.show()

Explanation:

-

Dataset:

- Small dataset with just 5 examples for simplicity.

- Features:

Age,Income. - Target:

Will_Buy(YesorNo).

-

Model:

- A

DecisionTreeClassifierwithmax_depth=2is trained on the data to keep the tree simple.

- A

-

Visualization:

- The decision tree is plotted using

plot_treeto show how the splits are made.

- The decision tree is plotted using

-

Prediction:

- The model predicts for a new person (e.g., Age = 30, Income = 50000).

Output:

This example demonstrates how a decision tree splits the data based on features to make predictions, keeping the logic easy to follow.